This is bad for NASA missions

NASA projects are suffering because of outdated supercomputers, according to the latest report from the agency's inspector general.

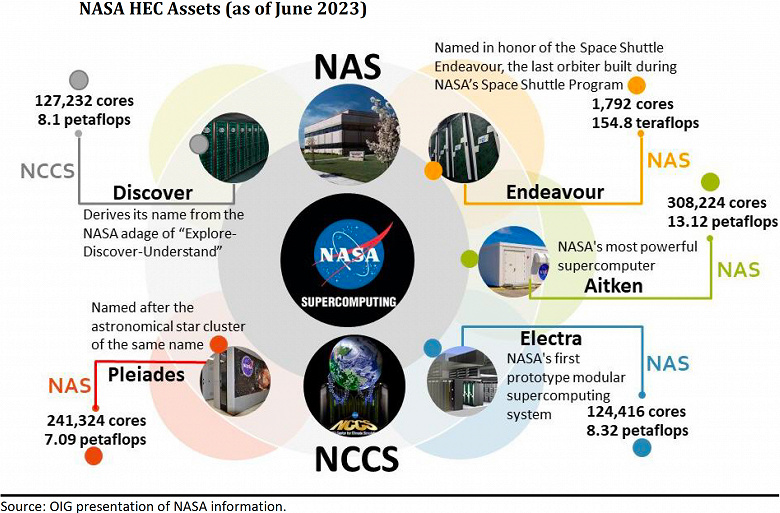

NASA currently operates five HEC (high-end computing) systems, a term the report uses interchangeably with supercomputers, with performance ranging from 154.8 TFLOPS to 13.12 PFLOPS. Almost all of them rely on CPUs alone, and very old ones at that. In particular, several systems have more than 18,000 processors and only 48 GPUs. These are still very powerful machines, but modern software and computational workloads are designed primarily for GPUs.

HEC officials raised numerous concerns about this finding, saying that the failure to modernize NASA's systems could be attributed to various factors, such as supply chain problems, modern programming language requirements, and a shortage of qualified personnel needed to deploy new technologies. Ultimately, the failure to upgrade the existing HEC infrastructure will directly affect the agency's ability to meet its research and scientific goals.

Interestingly, some mission teams work around the outdated NASA supercomputers on their own, for example by buying systems for their own needs. The Space Launch System team alone spends $250,000 a year on its own systems rather than waiting for access to NASA's supercomputers.

Confusion within NASA aggravates the situation. The agency lacks a cohesive strategy for when to use on-premises HEC assets and when to use cloud computing, and it does not fully understand the financial consequences of each choice.