54% of participants judged the AI to be human

The GPT-4 language model has passed the Turing test, according to a preprint published on arXiv.org.

The idea of the test is simple: participants converse with an interlocutor through a computer without knowing in advance whether that interlocutor is a human or a machine.

The study involved 500 people. Each of them spoke with each of the four interlocutors for five minutes and then had to say whether they thought the interlocutor was a human or a machine.

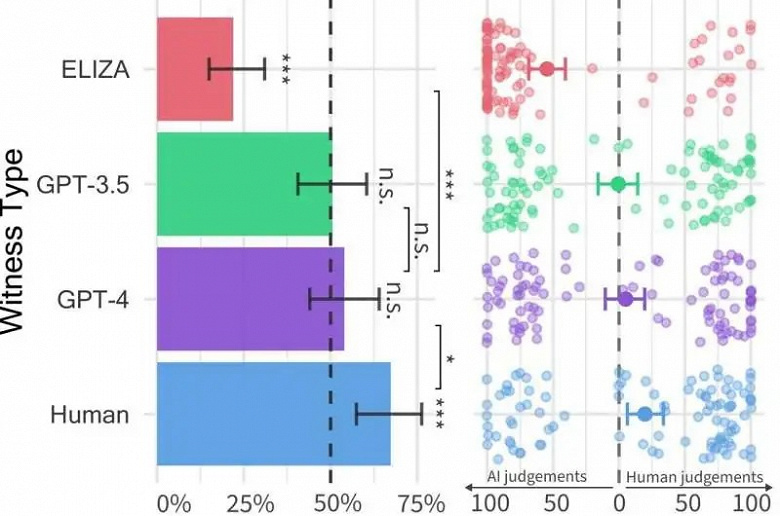

In addition to GPT-4, the test included the GPT-3.5 model, the old ELIZA program from the 1960s, and a real human. Only 22% of participants judged ELIZA to be human. For GPT-3.5 the figure was 50%, and for GPT-4 it was 54%, which leads the authors of the study to conclude that this language model passes the Turing test. For comparison, 67% of participants correctly identified the real human as human.
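To make the comparison easier to follow, here is a minimal Python sketch that tabulates the reported percentages and shows how far each interlocutor sits from a coin-flip guess and from the human result. The 50% chance baseline and the names `judged_human`, `CHANCE`, and `summarize` are assumptions of this sketch, not something taken from the preprint.

```python
# Share of participants who judged each interlocutor to be human,
# as reported in the article (percent).
judged_human = {
    "ELIZA": 22,
    "GPT-3.5": 50,
    "GPT-4": 54,
    "Human": 67,
}

# Assumption: a random guess between "human" and "machine" is right half the time.
CHANCE = 50


def summarize(rates, chance=CHANCE, reference="Human"):
    """Return, per interlocutor, the margin over chance and the gap to the human rate."""
    human_rate = rates[reference]
    return {
        name: {"vs_chance": rate - chance, "vs_human": rate - human_rate}
        for name, rate in rates.items()
    }


summary = summarize(judged_human)
for name, row in summary.items():
    print(f"{name:8s} vs chance: {row['vs_chance']:+d} pts, vs human: {row['vs_human']:+d} pts")
```

On these figures GPT-4 lands 4 percentage points above the chance baseline and 13 points below the real human. Whether a 4-point margin is statistically meaningful depends on how many judgments each interlocutor received, which the article does not report.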

Participants also rated their confidence in their decision on a scale from 0 to 100. The average confidence for each interlocutor was as follows:

- GPT-4: 70%
- GPT-3.5: 65%
- ELIZA: 90%
- Human: 80%